holoviews.data Package#

data Package#

- class holoviews.core.data.DataConversion(element)[source]#

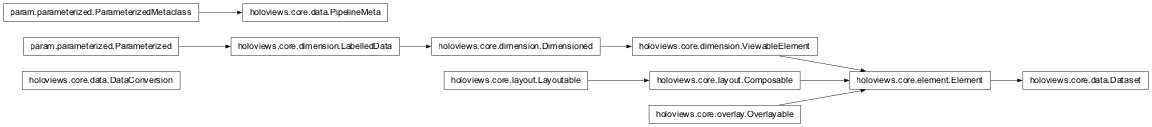

Bases: object

DataConversion is a very simple container object which can be given an existing Dataset Element and provides methods to convert the Dataset into most other Element types.

- class holoviews.core.data.Dataset(data=None, kdims=None, vdims=None, **kwargs)[source]#

Bases: Element

Dataset provides a general base class for Element types that contain structured data and supports a range of data formats.

The Dataset class supports various methods offering a consistent way of working with the stored data regardless of the storage format used. These operations include indexing, selection and various ways of aggregating or collapsing the data with a supplied function.

Parameters inherited from:

holoviews.core.dimension.LabelledData: label

holoviews.core.dimension.Dimensioned: cdims, kdims, vdims

group = param.String(allow_refs=False, constant=True, default='Dataset', label='Group', nested_refs=False, rx=<param.reactive.reactive_ops object at 0x7f6b0f7353d0>)

A string describing the data wrapped by the object.

datatype = param.List(allow_refs=False, bounds=(0, None), default=['dataframe', 'dictionary', 'grid', 'xarray', 'multitabular', 'spatialpandas', 'dask_spatialpandas', 'dask', 'cuDF', 'array', 'ibis'], label='Datatype', nested_refs=False, rx=<param.reactive.reactive_ops object at 0x7f6b0dd9d610>)

A priority list of the data types to be used for storage on the .data attribute. If the input supplied to the element constructor cannot be put into the requested format, the next format listed will be used until a suitable format is found (or the data fails to be understood).
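The fallback behaviour described for datatype can be pictured with a small plain-Python sketch. It does not use HoloViews: the converter functions and the pick_format helper are hypothetical stand-ins for the real interfaces, and only illustrate trying each format in priority order until one accepts the input.

```python
# Hypothetical converters: each returns the stored data or raises ValueError.
def to_dictionary(data):
    if isinstance(data, dict):
        return dict(data)
    raise ValueError("not a mapping of columns")


def to_array(data):
    if isinstance(data, (list, tuple)) and all(
        isinstance(row, (list, tuple)) for row in data
    ):
        return [list(row) for row in data]
    raise ValueError("not a sequence of rows")


# Only two format names are modelled in this sketch.
CONVERTERS = {"dictionary": to_dictionary, "array": to_array}


def pick_format(data, datatype):
    """Try each format name in priority order; return (name, converted data)."""
    for name in datatype:
        convert = CONVERTERS.get(name)
        if convert is None:
            continue  # format not modelled in this sketch
        try:
            return name, convert(data)
        except ValueError:
            continue  # fall through to the next format in the list
    raise ValueError("data could not be understood by any listed format")


chosen, stored = pick_format([(0, 1), (1, 4)], ["dictionary", "array"])
print(chosen, stored)
```

Here the list of rows is rejected by the first format and accepted by the second, which mirrors the "next format listed will be used" rule.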

- add_dimension(dimension, dim_pos, dim_val, vdim=False, **kwargs)[source]#

Adds a dimension and its values to the Dataset

Requires the dimension name or object, the desired position in the key dimensions and a key value scalar or array of values, matching the length or shape of the Dataset.

- Args:

dimension: Dimension or dimension spec to add

dim_pos (int): Integer index to insert dimension at

dim_val (scalar or ndarray): Dimension value(s) to add

vdim: Disabled, this type does not have value dimensions

**kwargs: Keyword arguments passed to the cloned element

- Returns:

Cloned object containing the new dimension

- aggregate(dimensions=None, function=None, spreadfn=None, **kwargs)[source]#

Aggregates data on the supplied dimensions.

Aggregates over the supplied key dimensions with the defined function or dim_transform specified as a tuple of the transformed dimension name and dim transform.

- Args:

- dimensions: Dimension(s) to aggregate on

Defaults to all key dimensions

- function: Aggregation function or transform to apply

Supports both simple functions and dimension transforms

- spreadfn: Secondary reduction to compute value spread

Useful for computing a confidence interval, spread, or standard deviation.

- **kwargs: Keyword arguments either passed to the aggregation function or used to create new names for the transformed variables

- Returns:

Returns the aggregated Dataset
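As a rough illustration of how function and spreadfn relate, the following plain-Python sketch groups rows and reduces a value column twice. It is not HoloViews code: the aggregate helper, the row layout, and the "_spread" column name are assumptions made for this example, and it assumes the supplied dimensions are the ones retained as grouping keys.

```python
from collections import defaultdict
from statistics import mean, pstdev

rows = [
    {"x": 0, "trial": 0, "y": 1.0},
    {"x": 0, "trial": 1, "y": 3.0},
    {"x": 1, "trial": 0, "y": 2.0},
    {"x": 1, "trial": 1, "y": 6.0},
]


def aggregate(rows, dimensions, vdim, function, spreadfn=None):
    """Group rows by the retained key dimensions and reduce the value column."""
    groups = defaultdict(list)
    for row in rows:
        key = tuple(row[d] for d in dimensions)
        groups[key].append(row[vdim])
    result = []
    for key, values in sorted(groups.items()):
        out = dict(zip(dimensions, key))
        out[vdim] = function(values)
        if spreadfn is not None:
            # Column name chosen for this sketch only
            out[vdim + "_spread"] = spreadfn(values)
        result.append(out)
    return result


aggregated = aggregate(rows, ["x"], "y", mean, spreadfn=pstdev)
print(aggregated)
```

The secondary reduction yields one spread value per group, which is the kind of quantity one would use for error bars or a confidence band.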

- array(dimensions=None)[source]#

Convert dimension values to columnar array.

- Args:

dimensions: List of dimensions to return

- Returns:

Array of columns corresponding to each dimension

- clone(data=None, shared_data=True, new_type=None, link=True, *args, **overrides)[source]#

Clones the object, overriding data and parameters.

- Args:

data: New data replacing the existing data

shared_data (bool, optional): Whether to use existing data

new_type (optional): Type to cast object to

link (bool, optional): Whether clone should be linked

Determines whether Streams and Links attached to original object will be inherited.

*args: Additional arguments to pass to constructor

**overrides: New keyword arguments to pass to constructor

- Returns:

Cloned object

- closest(coords=None, **kwargs)[source]#

Snaps coordinate(s) to closest coordinate in Dataset

- Args:

coords: List of coordinates expressed as tuples

**kwargs: Coordinates defined as keyword pairs

- Returns:

List of tuples of the snapped coordinates

- Raises:

NotImplementedError: Raised if snapping is not supported
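Snapping can be pictured as a nearest-neighbour lookup. The sketch below is a one-dimensional, plain-Python illustration under that assumption. The closest function here is a hypothetical helper, not the HoloViews method, and it returns bare values rather than tuples.

```python
def closest(coords, available):
    """Snap each requested coordinate to the nearest available coordinate."""
    return [min(available, key=lambda a: abs(a - c)) for c in coords]


xs = [0.0, 0.5, 1.0, 1.5]          # coordinates present in the data
snapped = closest([0.1, 0.9, 1.4], xs)
print(snapped)
```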

- columns(dimensions=None)[source]#

Convert dimension values to a dictionary.

Returns a dictionary of column arrays along each dimension of the element.

- Args:

dimensions: Dimensions to return as columns

- Returns:

Dictionary of arrays for each dimension

- compute()[source]#

Computes the data to a data format that stores the data in memory, e.g. a Dask dataframe or array is converted to a Pandas DataFrame or NumPy array.

- Returns:

Dataset with the data stored in in-memory format

- property dataset#

The Dataset that this object was created from

- property ddims#

The list of deep dimensions

- dframe(dimensions=None, multi_index=False)[source]#

Convert dimension values to DataFrame.

Returns a pandas dataframe of columns along each dimension, either completely flat or indexed by key dimensions.

- Args:

dimensions: Dimensions to return as columns

multi_index: Convert key dimensions to (multi-)index

- Returns:

DataFrame of columns corresponding to each dimension

- dimension_values(dimension, expanded=True, flat=True)[source]#

Return the values along the requested dimension.

- Args:

dimension: The dimension to return values for

expanded (bool, optional): Whether to expand values

Whether to return the expanded values, behavior depends on the type of data:

Columnar: If false returns unique values

Geometry: If false returns scalar values per geometry

Gridded: If false returns 1D coordinates

flat (bool, optional): Whether to flatten array

- Returns:

NumPy array of values along the requested dimension
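The difference between expanded and non-expanded values can be sketched with plain lists. This is only a conceptual illustration: the variable names are invented, the ordering of the results is a choice made here (sorted unique values, row-major expansion), and nothing below calls HoloViews.

```python
from itertools import product

# Columnar data: one entry per row
column_x = [0, 0, 1, 1, 2]
expanded_columnar = list(column_x)          # expanded=True: every row's value
unique_columnar = sorted(set(column_x))     # expanded=False: unique values

# Gridded data: 1D coordinates along each key dimension
xs = [0, 1, 2]
ys = [10, 20]
coords_x = list(xs)                         # expanded=False: 1D coordinates
# expanded=True: one x coordinate per grid sample
expanded_x = [x for x, _ in product(xs, ys)]

print(unique_columnar, expanded_x)
```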

- dimensions(selection='all', label=False)[source]#

Lists the available dimensions on the object

Provides convenient access to Dimensions on nested Dimensioned objects. Dimensions can be selected by their type, i.e. ‘key’ or ‘value’ dimensions. By default ‘all’ dimensions are returned.

- Args:

- selection: Type of dimensions to return

The type of dimension, i.e. one of ‘key’, ‘value’, ‘constant’ or ‘all’.

- label: Whether to return the name, label or Dimension

Whether to return the Dimension objects (False), the Dimension names (True/’name’) or labels (‘label’).

- Returns:

List of Dimension objects or their names or labels

- get_dimension(dimension, default=None, strict=False)[source]#

Get a Dimension object by name or index.

- Args:

dimension: Dimension to look up by name or integer index

default (optional): Value returned if Dimension not found

strict (bool, optional): Raise a KeyError if not found

- Returns:

Dimension object for the requested dimension or default

- get_dimension_index(dimension)[source]#

Get the index of the requested dimension.

- Args:

dimension: Dimension to look up by name or by index

- Returns:

Integer index of the requested dimension

- get_dimension_type(dim)[source]#

Get the type of the requested dimension.

Type is determined by Dimension.type attribute or common type of the dimension values, otherwise None.

- Args:

dim: Dimension to look up by name or by index

- Returns:

Declared type of values along the dimension

- groupby(dimensions=None, container_type=<class 'holoviews.core.spaces.HoloMap'>, group_type=None, dynamic=False, **kwargs)[source]#

Groups object by one or more dimensions

Applies groupby operation over the specified dimensions returning an object of type container_type (expected to be dictionary-like) containing the groups.

- Args:

dimensions: Dimension(s) to group by

container_type: Type to cast group container to

group_type: Type to cast each group to

dynamic: Whether to return a DynamicMap

**kwargs: Keyword arguments to pass to each group

- Returns:

Returns object of supplied container_type containing the groups. If dynamic=True returns a DynamicMap instead.
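The grouping described above can be approximated in plain Python, with a dictionary playing the role of the dictionary-like container_type. The groupby helper and the tuple-based rows are assumptions for illustration only, and the sketch drops the grouped column from each group.

```python
from collections import defaultdict

columns = ("category", "x", "y")
rows = [("a", 0, 1.0), ("a", 1, 2.0), ("b", 0, 3.0)]


def groupby(rows, columns, dimensions, container_type=dict):
    """Split rows into groups keyed by the values of the grouped dimensions."""
    grouped_idx = [columns.index(d) for d in dimensions]
    rest_idx = [i for i in range(len(columns)) if i not in grouped_idx]
    groups = defaultdict(list)
    for row in rows:
        key = tuple(row[i] for i in grouped_idx)
        # Each group keeps only the remaining (non-grouped) columns
        groups[key].append(tuple(row[i] for i in rest_idx))
    return container_type(groups)


grouped = groupby(rows, columns, ["category"])
print(grouped)
```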

- hist(dimension=None, num_bins=20, bin_range=None, adjoin=True, **kwargs)[source]#

Computes and adjoins histogram along specified dimension(s).

Defaults to first value dimension if present otherwise falls back to first key dimension.

- Args:

dimension: Dimension(s) to compute histogram on

num_bins (int, optional): Number of bins

bin_range (tuple, optional): Lower and upper bounds of bins

adjoin (bool, optional): Whether to adjoin histogram

- Returns:

AdjointLayout of element and histogram or just the histogram

- property iloc#

Returns iloc indexer with support for columnar indexing.

Returns an iloc object providing a convenient interface to slice and index into the Dataset using row and column indices. Allows selection by integer index, slice, list of integer indices, and boolean array.

Examples:

Index the first row and column:

dataset.iloc[0, 0]

Select rows 1 and 2 with a slice:

dataset.iloc[1:3, :]

Select with a list of integer coordinates:

dataset.iloc[[0, 2, 3]]

- map(map_fn, specs=None, clone=True)[source]#

Map a function to all objects matching the specs

Recursively replaces elements using a map function when the specs apply, by default applies to all objects, e.g. to apply the function to all contained Curve objects:

dmap.map(fn, hv.Curve)

- Args:

map_fn: Function to apply to each object

specs: List of specs to match

List of types, functions or type[.group][.label] specs to select objects to return, by default applies to all objects.

clone: Whether to clone the object or transform inplace

- Returns:

Returns the object after the map_fn has been applied

- matches(spec)[source]#

Whether the spec applies to this object.

- Args:

- spec: A function, spec or type to check for a match

A ‘type[[.group].label]’ string which is compared against the type, group and label of this object

A function which is given the object and returns a boolean.

An object type matched using isinstance.

- Returns:

bool: Whether the spec matched this object.

- property ndloc#

Returns ndloc indexer with support for gridded indexing.

Returns an ndloc object providing nd-array like indexing for gridded datasets. Follows NumPy array indexing conventions, allowing for indexing, slicing and selecting a list of indices on multi-dimensional arrays using integer indices. The order of array indices is inverted relative to the Dataset key dimensions, e.g. an Image with key dimensions ‘x’ and ‘y’ can be indexed with

image.ndloc[iy, ix], where iy and ix are integer indices along the y and x dimensions.

Examples:

Index value in 2D array:

dataset.ndloc[3, 1]

Slice along y-axis of 2D array:

dataset.ndloc[2:5, :]

Vectorized (non-orthogonal) indexing along x- and y-axes:

dataset.ndloc[[1, 2, 3], [0, 2, 3]]
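The inverted index order and the vectorized (non-orthogonal) form can be illustrated with nested lists standing in for a 2D array. The helper names are hypothetical and the sketch ignores any axis-orientation handling that a real gridded element may perform.

```python
# Rows correspond to y indices, columns to x indices
values = [
    [0, 1, 2, 3],      # iy = 0
    [10, 11, 12, 13],  # iy = 1
    [20, 21, 22, 23],  # iy = 2
    [30, 31, 32, 33],  # iy = 3
]


def ndloc_scalar(values, iy, ix):
    """Index a single value: y index first, then x index."""
    return values[iy][ix]


def ndloc_vectorized(values, iys, ixs):
    """Non-orthogonal indexing: pair the index lists element by element."""
    return [values[iy][ix] for iy, ix in zip(iys, ixs)]


single = ndloc_scalar(values, 3, 1)
paired = ndloc_vectorized(values, [1, 2, 3], [0, 2, 3])
print(single, paired)
```

Note that the vectorized form selects three individual cells, not the 3x3 block that an outer (orthogonal) selection would produce.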

- options(*args, clone=True, **kwargs)[source]#

Applies simplified option definition returning a new object.

Applies options on an object or nested group of objects in a flat format returning a new object with the options applied. If the options are to be set directly on the object a simple format may be used, e.g.:

obj.options(cmap='viridis', show_title=False)

If the object is nested the options must be qualified using a type[.group][.label] specification, e.g.:

obj.options('Image', cmap='viridis', show_title=False)

or using:

obj.options({'Image': dict(cmap='viridis', show_title=False)})

Identical to the .opts method but returns a clone of the object by default.

- Args:

- *args: Sets of options to apply to object

Supports a number of formats including lists of Options objects, a type[.group][.label] followed by a set of keyword options to apply and a dictionary indexed by type[.group][.label] specs.

- backend (optional): Backend to apply options to

Defaults to current selected backend

- clone (bool, optional): Whether to clone object

Options can be applied inplace with clone=False

- **kwargs: Keywords of options

Set of options to apply to the object

- Returns:

Returns the cloned object with the options applied

- persist()[source]#

Persists the results of a lazy data interface to memory to speed up data manipulation and visualization. If the particular data backend already holds the data in memory this is a no-op. Unlike the compute method this maintains the same data type.

- Returns:

Dataset with the data persisted to memory

- property pipeline#

Chain operation that evaluates the sequence of operations that was used to create this object, starting with the Dataset stored in dataset property

- range(dim, data_range=True, dimension_range=True)[source]#

Return the lower and upper bounds of values along dimension.

- Args:

dim: The dimension to compute the range on.

data_range (bool): Compute range from data values

dimension_range (bool): Include Dimension ranges

Whether to include Dimension range and soft_range in range calculation

- Returns:

Tuple containing the lower and upper bound

- reduce(dimensions=None, function=None, spreadfn=None, **reductions)[source]#

Applies reduction along the specified dimension(s).

Allows reducing the values along one or more key dimension with the supplied function. Supports two signatures:

Reducing with a list of dimensions, e.g.:

ds.reduce(['x'], np.mean)

Defining a reduction using keywords, e.g.:

ds.reduce(x=np.mean)

- Args:

- dimensions: Dimension(s) to apply reduction on

Defaults to all key dimensions

function: Reduction operation to apply, e.g. numpy.mean

spreadfn: Secondary reduction to compute value spread

Useful for computing a confidence interval, spread, or standard deviation.

- **reductions: Keyword argument defining reduction

Allows reduction to be defined as keyword pair of dimension and function

- Returns:

The Dataset after reductions have been applied.
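One way to think about the two call signatures is that both reduce to the same mapping from dimension name to reduction function. The sketch below shows that normalization in plain Python. The normalize_reductions helper is hypothetical and is not claimed to match how HoloViews processes its arguments.

```python
from statistics import mean


def normalize_reductions(dimensions=None, function=None, **reductions):
    """Return a {dimension: function} mapping for either call style."""
    if dimensions is not None and function is not None:
        if isinstance(dimensions, str):
            dimensions = [dimensions]
        # Positional style: apply the same function to every listed dimension
        reductions = {**{d: function for d in dimensions}, **reductions}
    return reductions


by_list = normalize_reductions(["x"], mean)   # like ds.reduce(['x'], np.mean)
by_keyword = normalize_reductions(x=mean)     # like ds.reduce(x=np.mean)
print(by_list == by_keyword)
```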

- reindex(kdims=None, vdims=None)[source]#

Reindexes Dataset dropping static or supplied kdims

Creates a new object with a reordered or reduced set of key dimensions. By default drops all non-varying key dimensions.

- Args:

kdims (optional): New list of key dimensions

vdims (optional): New list of value dimensions

- Returns:

Reindexed object

- relabel(label=None, group=None, depth=0)[source]#

Clone object and apply new group and/or label.

Applies relabeling to children up to the supplied depth.

- Args:

label (str, optional): New label to apply to returned object

group (str, optional): New group to apply to returned object

depth (int, optional): Depth to which relabel will be applied

If applied to container allows applying relabeling to contained objects up to the specified depth

- Returns:

Returns relabelled object

- sample(samples=None, bounds=None, closest=True, **kwargs)[source]#

Samples values at supplied coordinates.

Allows sampling of element with a list of coordinates matching the key dimensions, returning a new object containing just the selected samples. Supports multiple signatures:

Sampling with a list of coordinates, e.g.:

ds.sample([(0, 0), (0.1, 0.2), …])

Sampling a range or grid of coordinates, e.g.:

1D: ds.sample(3)

2D: ds.sample((3, 3))

Sampling by keyword, e.g.:

ds.sample(x=0)

- Args:

samples: List of nd-coordinates to sample

bounds: Bounds of the region to sample

Defined as two-tuple for 1D sampling and four-tuple for 2D sampling.

closest: Whether to snap to closest coordinates

**kwargs: Coordinates specified as keyword pairs

Keywords of dimensions and scalar coordinates

- Returns:

Element containing the sampled coordinates

- select(selection_expr=None, selection_specs=None, **selection)[source]#

Applies selection by dimension name

Applies a selection along the dimensions of the object using keyword arguments. The selection may be narrowed to certain objects using selection_specs. For container objects the selection will be applied to all children as well.

Selections may select a specific value, slice or set of values:

- value: Scalar values will select rows along with an exact match, e.g.:

ds.select(x=3)

- slice: Slices may be declared as tuples of the lower and upper bound, e.g.:

ds.select(x=(0, 3))

- values: A list of values may be selected using a list or set, e.g.:

ds.select(x=[0, 1, 2])

- predicate expression: A holoviews.dim expression, e.g.:

from holoviews import dim

ds.select(selection_expr=dim('x') % 2 == 0)

- Args:

- selection_expr: holoviews.dim predicate expression specifying selection.

- selection_specs: List of specs to match on

A list of types, functions, or type[.group][.label] strings specifying which objects to apply the selection on.

- **selection: Dictionary declaring selections by dimension

Selections can be scalar values, tuple ranges, lists of discrete values and boolean arrays

- Returns:

Returns a Dimensioned object containing the selected data or a scalar if a single value was selected
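The four kinds of selection criterion can be mimicked with a small plain-Python filter. This is a sketch under stated assumptions, not the HoloViews implementation: the helper names are invented, a plain callable stands in for a holoviews.dim predicate expression, and the tuple range is assumed to include the lower bound and exclude the upper bound.

```python
def satisfies(value, criterion):
    """Check one value against a scalar, tuple range, collection or callable."""
    if callable(criterion):
        return bool(criterion(value))
    if isinstance(criterion, tuple):
        lower, upper = criterion
        # Assumption: lower bound inclusive, upper bound exclusive
        return (lower is None or lower <= value) and (upper is None or value < upper)
    if isinstance(criterion, (list, set)):
        return value in criterion
    return value == criterion


def select(rows, **selection):
    """Keep the rows whose named columns satisfy every criterion."""
    return [
        row for row in rows
        if all(satisfies(row[dim], crit) for dim, crit in selection.items())
    ]


rows = [{"x": x, "y": x * x} for x in range(6)]
exact = select(rows, x=3)                      # scalar value
ranged = select(rows, x=(0, 3))                # tuple range
listed = select(rows, x=[0, 1, 2])             # list of values
even = select(rows, x=lambda v: v % 2 == 0)    # predicate stand-in
print(exact, [r["x"] for r in even])
```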

- property shape#

Returns the shape of the data.

- sort(by=None, reverse=False)[source]#

Sorts the data by the values along the supplied dimensions.

- Args:

by: Dimension(s) to sort by

reverse (bool, optional): Reverse sort order

- Returns:

Sorted Dataset

- property to#

Returns the conversion interface with methods to convert Dataset

- transform(*args, **kwargs)[source]#

Transforms the Dataset according to a dimension transform.

Transforms may be supplied as tuples consisting of the dimension(s) and the dim transform to apply or keyword arguments mapping from dimension(s) to dim transforms. If the arg or kwarg declares multiple dimensions the dim transform should return a tuple of values for each.

A transform may override an existing dimension or add a new one in which case it will be added as an additional value dimension.

- Args:

- args: Specify the output arguments and transforms as a tuple of dimension specs and dim transforms

drop (bool): Whether to drop all variables not part of the transform

keep_index (bool): Whether to keep indexes

Whether to apply transform on datastructure with index, e.g. pandas.Series or xarray.DataArray, (important for dask datastructures where index may be required to align datasets).

kwargs: Specify new dimensions in the form new_dim=dim_transform

- Returns:

Transformed dataset with new dimensions

- traverse(fn=None, specs=None, full_breadth=True)[source]#

Traverses object returning matching items

Traverses the set of children of the object, collecting all the objects matching the defined specs. Each object can be processed with the supplied function.

- Args:

fn (function, optional): Function applied to matched objects

specs: List of specs to match

Specs must be types, functions or type[.group][.label] specs to select objects to return, by default applies to all objects.

- full_breadth: Whether to traverse all objects

Whether to traverse the full set of objects on each container or only the first.

- Returns:

list: List of objects that matched

- class holoviews.core.data.PipelineMeta(classname, bases, classdict)[source]#

Bases: ParameterizedMetaclass

- property abstract#

Return True if the class has an attribute __abstract set to True. Subclasses will return False unless they themselves have __abstract set to true. This mechanism allows a class to declare itself to be abstract (e.g. to avoid it being offered as an option in a GUI), without the “abstract” property being inherited by its subclasses (at least one of which is presumably not abstract).

- get_param_descriptor(param_name)[source]#

Goes up the class hierarchy (starting from the current class) looking for a Parameter class attribute param_name. As soon as one is found as a class attribute, that Parameter is returned along with the class in which it is declared.

- mro()#

Return a type’s method resolution order.

- holoviews.core.data.concat(datasets, datatype=None)[source]#

Concatenates collection of datasets along NdMapping dimensions.

Concatenates multiple datasets wrapped in an NdMapping type along all of its dimensions. Before concatenation all datasets are cast to the same datatype, which may be explicitly defined or implicitly derived from the first datatype that is encountered. For columnar data concatenation adds the columns for the dimensions being concatenated along and then concatenates all the old and new columns. For gridded data a new axis is created for each dimension being concatenated along and then hierarchically concatenates along each dimension.

- Args:

datasets: NdMapping of Datasets to concatenate

datatype: Datatype to cast data to before concatenation

- Returns:

Concatenated dataset
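For the columnar case, the description above amounts to adding a column for the mapping key and then stacking all columns. The following plain-Python sketch shows that idea with an ordinary dictionary standing in for the NdMapping. The concat_columnar helper and the 'year' dimension are made up for illustration.

```python
# Stand-in for an NdMapping: keys are values of the 'year' dimension,
# values are columnar datasets stored as dictionaries of columns.
datasets = {
    2020: {"x": [0, 1], "y": [5.0, 6.0]},
    2021: {"x": [0, 1, 2], "y": [7.0, 8.0, 9.0]},
}


def concat_columnar(datasets, dimension):
    """Add a column for the mapping key, then stack old and new columns."""
    merged = {dimension: []}
    for key, columns in datasets.items():
        n_rows = len(next(iter(columns.values())))
        merged[dimension].extend([key] * n_rows)  # new column for the key
        for name, values in columns.items():
            merged.setdefault(name, []).extend(values)
    return merged


combined = concat_columnar(datasets, "year")
print(combined)
```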

array Module#

- class holoviews.core.data.array.ArrayInterface(*, name)[source]#

Bases: Interface

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

cudf Module#

- class holoviews.core.data.cudf.cuDFInterface(*, name)[source]#

Bases: PandasInterface

The cuDFInterface allows Dataset objects to wrap a cuDF DataFrame object. Using cuDF allows working with columnar data on a GPU. Most operations leave the data in GPU memory, however to plot the data it has to be loaded into memory.

The cuDFInterface covers almost the complete API exposed by the PandasInterface with two notable exceptions:

- Aggregation and groupby do not have a consistent sort order (see rapidsai/cudf#4237)

- Not all functions can be easily applied to a cuDF so some functions applied with aggregate and reduce will not work.

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

dask Module#

- class holoviews.core.data.dask.DaskInterface(*, name)[source]#

Bases: PandasInterface

The DaskInterface allows Dataset objects to wrap a dask DataFrame object. Using dask allows loading data lazily and performing out-of-core operations on the data, making it possible to work on datasets larger than memory.

The DaskInterface covers almost the complete API exposed by the PandasInterface with three notable exceptions:

- Sorting is not supported and any attempt at sorting will be ignored with a warning.

- Dask does not easily support adding a new column to an existing dataframe unless it is a scalar, add_dimension will therefore error when supplied a non-scalar value.

- Not all functions can be easily applied to a dask dataframe so some functions applied with aggregate and reduce will not work.

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod iloc(dataset, index)[source]#

Dask does not support iloc, therefore iloc will execute the call graph and lose the laziness of the operation.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

dictionary Module#

- class holoviews.core.data.dictionary.DictInterface(*, name)[source]#

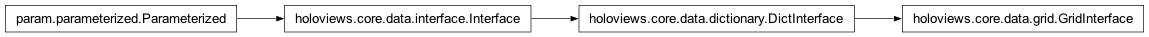

Bases: Interface

Interface for simple dictionary-based dataset format. The dictionary keys correspond to the column (i.e. dimension) names and the values are collections representing the values in that column.

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

grid Module#

- class holoviews.core.data.grid.GridInterface(*, name)[source]#

Bases: DictInterface

Interface for simple dictionary-based dataset format using a compressed representation that uses the Cartesian product between key dimensions. As with DictInterface, the dictionary keys correspond to the column (i.e. dimension) names and the values are NumPy arrays representing the values in that column.

To use this compressed format, the key dimensions must be orthogonal to one another with each key dimension specifying an axis of the multidimensional space occupied by the value dimension data. For instance, given temperature recordings sampled regularly across the earth's surface, a list of N unique latitudes and M unique longitudes can specify the position of NxM temperature samples.
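The compression comes from storing each coordinate axis once instead of once per sample. The plain-Python sketch below makes that concrete with N = 3 latitudes and M = 4 longitudes. The temperature values are made up, the expand helper is hypothetical, and the axis order of the value array is chosen for readability rather than to match the interface's internal layout.

```python
from itertools import product

lats = [-45.0, 0.0, 45.0]          # N = 3 unique latitudes
lons = [0.0, 90.0, 180.0, 270.0]   # M = 4 unique longitudes
# One row of M temperatures per latitude (N x M values, invented for illustration)
temperature = [
    [10.0, 11.0, 12.0, 13.0],
    [25.0, 26.0, 27.0, 28.0],
    [9.0, 8.0, 7.0, 6.0],
]

# Compressed form: N + M coordinates plus N * M values
grid = {"lat": lats, "lon": lons, "temperature": temperature}


def expand(grid):
    """Expand the compressed grid into one (lat, lon, value) row per sample."""
    return [
        (lat, lon, grid["temperature"][i][j])
        for (i, lat), (j, lon) in product(
            enumerate(grid["lat"]), enumerate(grid["lon"])
        )
    ]


table = expand(grid)
print(len(table), table[0], table[-1])
```

The expanded table needs 2 * N * M coordinate entries, whereas the compressed form needs only N + M.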

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod canonicalize(dataset, data, data_coords=None, virtual_coords=None)[source]#

Canonicalize takes an array of values as input and reorients and transposes it to match the canonical format expected by plotting functions. In certain cases the dimensions defined via the kdims of an Element may not match the dimensions of the underlying data. A set of data_coords may be passed in to define the dimensionality of the data, which can then be used to np.squeeze the data to remove any constant dimensions. If the data is also irregular, i.e. contains multi-dimensional coordinates, a set of virtual_coords can be supplied, required by some interfaces (e.g. xarray) to index irregular datasets with a virtual integer index. This ensures these coordinates are not simply dropped.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod coords(dataset, dim, ordered=False, expanded=False, edges=False)[source]#

Returns the coordinates along a dimension. Ordered ensures coordinates are in ascending order and expanded creates ND-array matching the dimensionality of the dataset.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

- classmethod sample(dataset, samples=None)[source]#

Samples the gridded data into dataset of samples.

ibis Module#

- class holoviews.core.data.ibis.IbisInterface(*, name)[source]#

Bases: Interface

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

image Module#

- class holoviews.core.data.image.ImageInterface(*, name)[source]#

Bases: GridInterface

Interface for 2D or 3D arrays representing images of raw luminance values, RGB values or HSV values.

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod canonicalize(dataset, data, data_coords=None, virtual_coords=None)[source]#

Canonicalize takes an array of values as input and reorients and transposes it to match the canonical format expected by plotting functions. In certain cases the dimensions defined via the kdims of an Element may not match the dimensions of the underlying data. A set of data_coords may be passed in to define the dimensionality of the data, which can then be used to np.squeeze the data to remove any constant dimensions. If the data is also irregular, i.e. contains multi-dimensional coordinates, a set of virtual_coords can be supplied, required by some interfaces (e.g. xarray) to index irregular datasets with a virtual integer index. This ensures these coordinates are not simply dropped.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod coords(dataset, dim, ordered=False, expanded=False, edges=False)[source]#

Returns the coordinates along a dimension. Ordered ensures coordinates are in ascending order and expanded creates ND-array matching the dimensionality of the dataset.
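The effect of the ordered and expanded flags can be pictured with plain lists. The sketch below is not the library's code: `coords_sketch` is a hypothetical helper, and it assumes a 2D grid stored row by row, with the x-coordinates running along the columns.

```python
def coords_sketch(xs, n_rows, ordered=False, expanded=False):
    """Return x-coordinates of a grid with n_rows rows and len(xs) columns."""
    # ordered: sort the 1D coordinates into ascending order.
    result = sorted(xs) if ordered else list(xs)
    if expanded:
        # expanded: repeat the 1D coordinates once per row so the result
        # has the same shape as the gridded values.
        result = [list(result) for _ in range(n_rows)]
    return result


xs = [3, 1, 2]
ascending = coords_sketch(xs, n_rows=2, ordered=True)
grid = coords_sketch(xs, n_rows=2, ordered=True, expanded=True)
```

Here `ascending` is a flat list of three values, while `grid` holds one copy of that list for each of the two rows.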

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

- classmethod sample(dataset, samples=None)[source]#

Sample the Raster along one or both of its dimensions, returning a reduced dimensionality type, which is either an ItemTable, Curve or Scatter. If two dimension samples and a new_xaxis are provided, the sample will be the value of the sampled unit indexed by the value in the new_xaxis tuple.

- classmethod select(dataset, selection_mask=None, **selection)[source]#

Slice the underlying numpy array in sheet coordinates.

- classmethod select_mask(dataset, selection)[source]#

Given a Dataset object and a dictionary with dimension keys and selection keys (i.e. tuple ranges, slices, sets, lists, or literals) return a boolean mask over the rows in the Dataset object that have been selected.
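As a rough illustration of the selection types listed above, the following sketch builds a row mask over a plain dictionary of columns. It is not the HoloViews implementation: `select_mask_sketch` and the column data are hypothetical, and treating tuple ranges and slices as lower-inclusive, upper-exclusive is an assumption.

```python
def select_mask_sketch(columns, selection):
    """Return one boolean per row; a row is kept if it matches every selection."""
    n_rows = len(next(iter(columns.values())))
    mask = [True] * n_rows
    for dim, sel in selection.items():
        values = columns[dim]
        if isinstance(sel, tuple):
            # Treat a (start, stop) tuple like a slice.
            sel = slice(*sel)
        if isinstance(sel, slice):
            # Assumed semantics: start <= value < stop; None leaves a side open.
            matches = [
                (sel.start is None or sel.start <= v)
                and (sel.stop is None or v < sel.stop)
                for v in values
            ]
        elif isinstance(sel, (set, list)):
            # Sets and lists select any row whose value is a member.
            matches = [v in sel for v in values]
        else:
            # A literal selects rows with exactly that value.
            matches = [v == sel for v in values]
        # Combine with the mask built so far (logical AND across dimensions).
        mask = [m and hit for m, hit in zip(mask, matches)]
    return mask


columns = {"x": [0, 1, 2, 3], "label": ["a", "b", "a", "c"]}
mask = select_mask_sketch(columns, {"x": (1, 4), "label": ["a", "c"]})
```

Selections on different dimensions are combined with a logical AND, so a row survives only if every dimension's criterion accepts it.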

interface Module#

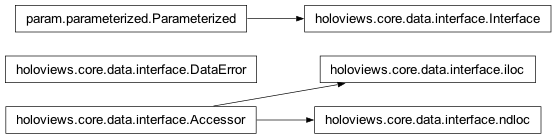

- exception holoviews.core.data.interface.DataError(msg, interface=None)[source]#

Bases:

ValueError

DataError is raised when the data cannot be interpreted.

- add_note()#

Exception.add_note(note) – add a note to the exception

- with_traceback()#

Exception.with_traceback(tb) – set self.__traceback__ to tb and return self.

- class holoviews.core.data.interface.Interface(*, name)[source]#

Bases:

Parameterized

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.
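The default check and the reason for overriding it can be sketched as follows. All class names, the `types` attribute layout, the module name and the `Table` type are hypothetical stand-ins; the use of `isinstance` (rather than an exact type match) is also an assumption, not something this page states.

```python
import sys


class InterfaceSketch:
    """Hypothetical stand-in for an interface base class."""

    types = ()  # concrete types this interface handles

    @classmethod
    def applies(cls, obj):
        # Default behaviour: check the object against the declared types.
        return isinstance(obj, cls.types)


class DictInterfaceSketch(InterfaceSketch):
    types = (dict,)


class LazyInterfaceSketch(InterfaceSketch):
    """Overrides applies() so an expensive module is never imported eagerly."""

    module_name = "some_heavy_library"  # hypothetical module name

    @classmethod
    def applies(cls, obj):
        module = sys.modules.get(cls.module_name)
        if module is None:
            # The library was never imported, so obj cannot be one of its types.
            return False
        return isinstance(obj, module.Table)  # hypothetical type


handles_dict = DictInterfaceSketch.applies({"x": [1, 2]})
handles_list = DictInterfaceSketch.applies([1, 2])
handles_lazy = LazyInterfaceSketch.applies({"x": [1, 2]})
```

Looking the module up in `sys.modules` lets the override answer without triggering the import itself.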

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- class holoviews.core.data.interface.iloc(dataset)[source]#

Bases:

Accessor

iloc is a small wrapper object that allows row, column based indexing into a Dataset using the .iloc property. It supports the usual numpy and pandas iloc indexing semantics including integer indices, slices, lists and arrays of values. For more information see the Dataset.iloc property docstring.
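The accessor pattern described here can be sketched with a small wrapper over a list of rows. `IlocSketch` is a hypothetical class written for illustration only; it mimics integer, slice and list indexing on plain Python lists and does not reproduce the return types of the real accessor.

```python
class IlocSketch:
    """Hypothetical accessor giving positional (row, column) indexing over rows."""

    def __init__(self, rows):
        self.rows = rows  # list of equal-length lists, one per row

    def __getitem__(self, index):
        # Accept either a row index alone or a (row, column) pair.
        if isinstance(index, tuple):
            row_idx, col_idx = index
        else:
            row_idx, col_idx = index, slice(None)
        picked = self._take(self.rows, row_idx)
        if isinstance(row_idx, int):
            # A single row was picked: index into that row directly.
            return self._take(picked, col_idx)
        # Several rows were picked: apply the column index to each of them.
        return [self._take(row, col_idx) for row in picked]

    @staticmethod
    def _take(seq, idx):
        # Integers and slices use normal indexing; lists pick several positions.
        if isinstance(idx, list):
            return [seq[i] for i in idx]
        return seq[idx]


table = IlocSketch([[0, 10], [1, 11], [2, 12]])
first_row = table[0]
cell = table[1, 1]
column = table[:, 1]
subset = table[[0, 2], 0]
```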

- class holoviews.core.data.interface.ndloc(dataset)[source]#

Bases:

Accessor

ndloc is a small wrapper object that allows ndarray-like indexing for gridded Datasets using the .ndloc property. It supports the standard NumPy ndarray indexing semantics including integer indices, slices, lists and arrays of values. For more information see the Dataset.ndloc property docstring.

multipath Module#

- class holoviews.core.data.multipath.MultiInterface(*, name)[source]#

Bases:

Interface

MultiInterface allows wrapping around a list of tabular datasets including dataframes, the columnar dictionary format or 2D tabular NumPy arrays. Using the split method the list of tabular data can be split into individual datasets.

The interface makes a list of tabular datasets appear as a single dataset. The interface may be used to represent geometries, so the behavior depends on the type of geometry being represented.
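Conceptually, presenting several tables as one amounts to joining them with NaN separator rows and cutting at those rows to recover the parts. The sketch below shows that idea on plain lists of (x, y) tuples; `concat_paths` and `split_paths` are hypothetical helpers, whereas the library's own split method operates on Dataset objects.

```python
import math


def concat_paths(paths):
    """Join several lists of (x, y) rows into one list with NaN separator rows."""
    combined = []
    for i, path in enumerate(paths):
        if i > 0:
            # Insert a NaN row between consecutive paths.
            combined.append((math.nan, math.nan))
        combined.extend(path)
    return combined


def split_paths(rows):
    """Recover the individual paths by cutting at rows whose x value is NaN."""
    paths, current = [], []
    for row in rows:
        if math.isnan(row[0]):
            paths.append(current)
            current = []
        else:
            current.append(row)
    paths.append(current)
    return paths


paths = [[(0, 0), (1, 1)], [(5, 5), (6, 7), (7, 5)]]
combined = concat_paths(paths)
recovered = split_paths(combined)
```

The combined list has one row per original point plus one separator row between the two paths, and splitting it returns the original paths.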

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isscalar(dataset, dim, per_geom=False)[source]#

Tests if dimension is scalar in each subpath.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

- classmethod length(dataset)[source]#

Returns the length of the multi-tabular dataset making it appear like a single array of concatenated subpaths separated by NaN values.

- classmethod select(dataset, selection_mask=None, **selection)[source]#

Applies selection on all the subpaths.

- classmethod select_mask(dataset, selection)[source]#

Given a Dataset object and a dictionary with dimension keys and selection keys (i.e. tuple ranges, slices, sets, lists, or literals) return a boolean mask over the rows in the Dataset object that have been selected.

- classmethod select_paths(dataset, index)[source]#

Allows selecting paths with usual NumPy slicing index.

- classmethod shape(dataset)[source]#

Returns the shape of all subpaths, making it appear like a single array of concatenated subpaths separated by NaN values.

- holoviews.core.data.multipath.ensure_ring(geom, values=None)[source]#

Ensure the (multi-)geometry forms a ring.

Checks the start- and end-point of each geometry to ensure they form a ring, if not the start point is inserted at the end point. If a values array is provided (which must match the geometry in length) then the insertion will occur on the values instead, ensuring that they will match the ring geometry.

- Args:

geom: 2-D array of geometry coordinates

values: Optional array of values

- Returns:

Array where values have been inserted and ring closing indexes
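The ring-closing check can be sketched for the simplest case of a single geometry held as a list of points. `close_ring` is a hypothetical helper: unlike the documented function, it ignores multi-geometries and the optional values array, and it returns only the closed coordinates.

```python
def close_ring(coords):
    """Return a copy of coords whose last point equals its first point."""
    if not coords or coords[0] == coords[-1]:
        # Empty or already closed: nothing to insert.
        return list(coords)
    # Not closed: repeat the start point at the end.
    return list(coords) + [coords[0]]


triangle = [(0, 0), (1, 0), (0, 1)]
closed = close_ring(triangle)
unchanged = close_ring(closed)
```

Applying the helper to an already closed ring leaves it as it was, so the operation is safe to repeat.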

pandas Module#

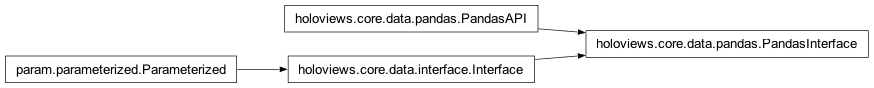

- class holoviews.core.data.pandas.PandasAPI[source]#

Bases:

object

This class is used to describe the interface as having a pandas-like API.

The reason to have this class is that it is not always possible to directly inherit from the PandasInterface.

This class should not have any logic as it should be used like:

if issubclass(interface, PandasAPI): …
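The marker-class pattern described above can be sketched in isolation. Every name below is a hypothetical stand-in rather than a HoloViews class; the point is only that the marker carries no behaviour and is used purely in `issubclass` checks.

```python
class PandasLikeAPI:
    """Marker class: carries no logic, only signals a pandas-like API."""


class BaseInterface:
    """Hypothetical base class for all interfaces."""


class FrameInterface(BaseInterface, PandasLikeAPI):
    """Hypothetical interface that exposes pandas-like behaviour."""


class ArrayInterface(BaseInterface):
    """Hypothetical interface without a pandas-like API."""


def describe(interface):
    # Branch on the marker instead of on a concrete interface class.
    if issubclass(interface, PandasLikeAPI):
        return "pandas-like"
    return "other"


frame_kind = describe(FrameInterface)
array_kind = describe(ArrayInterface)
```

Because the check targets the marker, an interface can opt in by adding the marker to its bases without inheriting from any particular concrete interface.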

- class holoviews.core.data.pandas.PandasInterface(*, name)[source]#

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

spatialpandas Module#

- class holoviews.core.data.spatialpandas.SpatialPandasInterface(*, name)[source]#

Bases:

MultiInterface

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- base_interface[source]#

alias of

PandasInterface

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isscalar(dataset, dim, per_geom=False)[source]#

Tests if dimension is scalar in each subpath.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

- classmethod length(dataset)[source]#

Returns the length of the multi-tabular dataset making it appear like a single array of concatenated subpaths separated by NaN values.

- classmethod select(dataset, selection_mask=None, **selection)[source]#

Applies selection on all the subpaths.

- classmethod select_mask(dataset, selection)[source]#

Given a Dataset object and a dictionary with dimension keys and selection keys (i.e. tuple ranges, slices, sets, lists, or literals) return a boolean mask over the rows in the Dataset object that have been selected.

- classmethod select_paths(dataset, index)[source]#

Allows selecting paths with usual NumPy slicing index.

- classmethod shape(dataset)[source]#

Returns the shape of all subpaths, making it appear like a single array of concatenated subpaths separated by NaN values.

- holoviews.core.data.spatialpandas.from_multi(eltype, data, kdims, vdims)[source]#

Converts list formats into spatialpandas.GeoDataFrame.

- Args:

eltype: Element type to convert

data: The original data

kdims: The declared key dimensions

vdims: The declared value dimensions

- Returns:

A GeoDataFrame containing the data in the list based format.

- holoviews.core.data.spatialpandas.from_shapely(data)[source]#

Converts shapely based data formats to spatialpandas.GeoDataFrame.

- Args:

data: A list of shapely objects or dictionaries containing shapely objects

- Returns:

A GeoDataFrame containing the shapely geometry data.

- holoviews.core.data.spatialpandas.geom_array_to_array(geom_array, index, expand=False, geom_type=None)[source]#

Converts spatialpandas extension arrays to a flattened array.

- Args:

geom_array: spatialpandas geometry

index: The column index to return

- Returns:

Flattened array

- holoviews.core.data.spatialpandas.geom_to_array(geom, index=None, multi=False, geom_type=None)[source]#

Converts spatialpandas geometry to an array.

- Args:

geom: spatialpandas geometry

index: The column index to return

multi: Whether to concatenate multiple arrays or not

- Returns:

Array or list of arrays.

- holoviews.core.data.spatialpandas.geom_to_holes(geom)[source]#

Extracts holes from spatialpandas Polygon geometries.

- Args:

geom: spatialpandas geometry

- Returns:

List of arrays representing holes

- holoviews.core.data.spatialpandas.get_geom_type(gdf, col)[source]#

Return the HoloViews geometry type string for the geometry column.

- Args:

gdf: The GeoDataFrame to get the geometry from

col: The geometry column

- Returns:

A string representing the type of geometry

- holoviews.core.data.spatialpandas.get_value_array(data, dimension, expanded, keep_index, geom_col, is_points, geom_length=<function geom_length>)[source]#

Returns an array of values from a GeoDataFrame.

- Args:

data: GeoDataFrame

dimension: The dimension to get the values from

expanded: Whether to expand the value array

keep_index: Whether to return a Series

geom_col: The column in the data that contains the geometries

is_points: Whether the geometries are points

geom_length: The function used to compute the length of each geometry

- Returns:

An array containing the values along a dimension

- holoviews.core.data.spatialpandas.to_geom_dict(eltype, data, kdims, vdims, interface=None)[source]#

Converts data from any list format to a dictionary based format.

- Args:

eltype: Element type to convert

data: The original data

kdims: The declared key dimensions

vdims: The declared value dimensions

- Returns:

A list of dictionaries containing geometry coordinates and values.

- holoviews.core.data.spatialpandas.to_spatialpandas(data, xdim, ydim, columns=None, geom='point')[source]#

Converts list of dictionary format geometries to spatialpandas line geometries.

- Args:

data: List of dictionaries representing individual geometries

xdim: Name of x-coordinates column

ydim: Name of y-coordinates column

columns: List of columns to add

geom: The type of geometry

- Returns:

A spatialpandas.GeoDataFrame version of the data

spatialpandas_dask Module#

- class holoviews.core.data.spatialpandas_dask.DaskSpatialPandasInterface(*, name)[source]#

Bases:

SpatialPandasInterface

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- base_interface[source]#

alias of

DaskInterface

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isscalar(dataset, dim, per_geom=False)[source]#

Tests if dimension is scalar in each subpath.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

- classmethod length(dataset)[source]#

Returns the length of the multi-tabular dataset making it appear like a single array of concatenated subpaths separated by NaN values.

- classmethod select(dataset, selection_mask=None, **selection)[source]#

Applies selection on all the subpaths.

- classmethod select_mask(dataset, selection)[source]#

Given a Dataset object and a dictionary with dimension keys and selection keys (i.e. tuple ranges, slices, sets, lists, or literals) return a boolean mask over the rows in the Dataset object that have been selected.

- classmethod select_paths(dataset, index)[source]#

Allows selecting paths with usual NumPy slicing index.

- classmethod shape(dataset)[source]#

Returns the shape of all subpaths, making it appear like a single array of concatenated subpaths separated by NaN values.

util Module#

xarray Module#

- class holoviews.core.data.xarray.XArrayInterface(*, name)[source]#

Bases:

GridInterface

- classmethod applies(obj)[source]#

Indicates whether the interface is designed specifically to handle the supplied object’s type. By default simply checks if the object is one of the types declared on the class, however if the type is expensive to import at load time the method may be overridden.

- classmethod as_dframe(dataset)[source]#

Returns the data of a Dataset as a dataframe, avoiding copying if it is already a dataframe type.

- classmethod canonicalize(dataset, data, data_coords=None, virtual_coords=None)[source]#

Canonicalize takes an array of values as input and reorients and transposes it to match the canonical format expected by plotting functions. In certain cases the dimensions defined via the kdims of an Element may not match the dimensions of the underlying data. A set of data_coords may be passed in to define the dimensionality of the data, which can then be used to np.squeeze the data to remove any constant dimensions. If the data is also irregular, i.e. contains multi-dimensional coordinates, a set of virtual_coords can be supplied, required by some interfaces (e.g. xarray) to index irregular datasets with a virtual integer index. This ensures these coordinates are not simply dropped.

- classmethod cast(datasets, datatype=None, cast_type=None)[source]#

Given a list of Dataset objects, cast them to the specified datatype (by default the format matching the current interface) with the given cast_type (if specified).

- classmethod concatenate(datasets, datatype=None, new_type=None)[source]#

Utility function to concatenate an NdMapping of Dataset objects.

- classmethod coords(dataset, dimension, ordered=False, expanded=False, edges=False)[source]#

Returns the coordinates along a dimension. Ordered ensures coordinates are in ascending order and expanded creates ND-array matching the dimensionality of the dataset.

- classmethod indexed(dataset, selection)[source]#

Given a Dataset object and selection to be applied returns boolean to indicate whether a scalar value has been indexed.

- classmethod isunique(dataset, dim, per_geom=False)[source]#

Compatibility method introduced for v1.13.0 to smooth over addition of per_geom kwarg for isscalar method.

- classmethod sample(dataset, samples=None)[source]#

Samples the gridded data into dataset of samples.